Who bears the costs of AI innovation?

Why public leaders must examine not just what AI can do, but who it serves—and who it leaves behind.

At Public Servants, we think deeply about how technology shapes public systems and public trust. This reflection builds on that work—connecting the lessons of AI’s rapid evolution with the values and governance approaches we help public-serving organizations strengthen every day.

Why this question matters right now

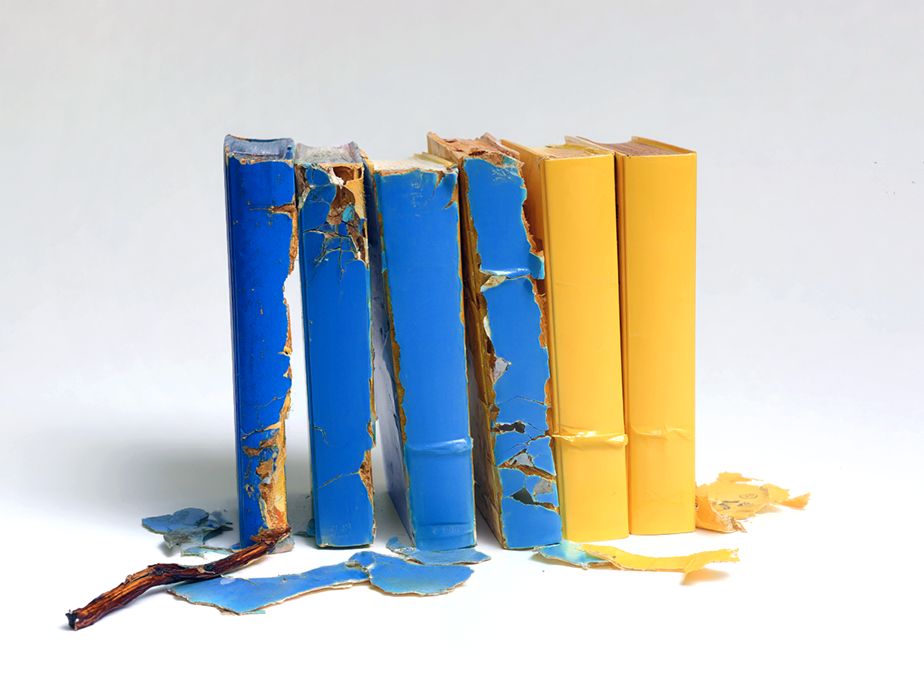

Uneven wear reveals uneven impact. Systems rarely erode evenly—and neither do the costs of innovation.

AI is often framed around possibility: what we could accelerate, what we might solve, how much more efficient or insightful our systems could become. Curiosity drives that excitement. And curiosity is a good thing.

But in public service, curiosity alone isn’t enough. Public leaders have to ask a deeper, harder question:

Who benefits from AI—and who bears its costs?

Because history is clear: new technologies rarely create new power structures. They tend to amplify the ones we already have.

The pattern we need to break

Across eras of industrial and technological change, extraction has followed a predictable arc:

value is taken from communities without power or recourse

shared resources are turned into private assets

those experiencing harm are the last to be heard and the least able to shape solutions

We’re watching that pattern reappear in the evolution of AI.

Data gathered from billions of people—writing, images, artwork, voice, community knowledge—is treated as “open” simply because it’s visible.

And once that value is extracted, the resulting systems are enclosed behind:

proprietary models

closed weights

sealed training data

aggressive IP protections

limited auditability

opaque governance

Open for extraction.

Closed for accountability.

This isn’t a new failure of imagination. It’s a familiar one—and public leaders cannot afford to inherit it.

Our responsibility is to the public, not to private interests, and the systems we allow today shape the legitimacy of public institutions tomorrow. As AI accelerates, that responsibility becomes even more urgent. We can’t afford to let old patterns harden into new defaults.

We explore similar historical patterns and their impact on public systems in our post on the Policy Implementation Gap, which examines what happens when systems evolve faster than accountability structures.

How extraction becomes a governance failure

For government and nonprofit teams, extractive AI models don’t just create ethical concerns. They create governance risks:

Systems may reinforce existing inequities rather than reduce them.

People most affected by decisions may have the least visibility into how they’re made.

Agencies may rely on tools built on data or labor extracted without consent, undermining legitimacy.

Public trust erodes when communities feel acted upon—not included.

When AI systems shape eligibility, access, communications, or public experience, extractive models don’t just break design—they break democracy. As public servants—inside and outside of government—our work is to ensure these systems uphold the public good, not undermine it. That commitment is not theoretical; it’s part of the daily responsibility of anyone designing, funding, or delivering public systems.

We’ve written before about how public trust is shaped not just by services, but by the systems and decisions behind them. Our Trust Signals Scorecard offers practical ways agencies can strengthen legitimacy and transparency.

What public leaders can do next

Public-sector leaders don’t need to be AI experts. The complexities of model architecture or training data aren’t the barrier. We need to be stewards—clear about the values guiding our decisions and firm about the accountability those decisions require.

Here are practical first steps that help shift innovation from extraction to accountability:

Ask “who pays and who benefits?” at the problem-definition stage. Don’t wait until procurement or deployment to assess impact.

Require clarity on data provenance and consent. “Publicly available” is not the same as ethical, community-supported, or community-supporting.

Build governance before implementation. Define oversight, auditability, and redress mechanisms early—not after harm occurs.

Ensure communities most affected shape the solution. Move beyond consultation to real co-ownership wherever possible.

Evaluate vendors based on values, not just capability. Transparency, accountability, and alignment with mission should be procurement criteria.

Align innovation with public value, not vendor narratives. Scale and efficiency are not goals on their own. Legitimacy, safety, and equity are.

Stewardship isn’t the constraint on innovation. It’s what makes innovation sustainable, trustworthy, and genuinely impactful. And we can build that—together. Public leaders, nonprofit teams, designers, researchers, technologists, and community members each hold part of the responsibility and the possibility. When we choose clarity over convenience, accountability over speed, and community over extraction, we don’t just build better systems—we strengthen the public institutions people rely on every day.

For teams looking to deepen their capability in this area, our workshop Designing for the Public Good—Civic design for public sector leaders—introduces practical tools for aligning technology decisions with mission, equity, and community impact.

How Public Servants helps

Stewardship isn’t a constraint on innovation; it’s what makes it sustainable.

Public Servants works with government and nonprofit organizations to ensure that systems—AI-enabled or not—are built on public values from the inside out.

We help teams:

Build governance structures that embed accountability

Connect innovation decisions to mission outcomes

Design processes that center communities most impacted

Translate values like integrity, courage, and stewardship into everyday choices

Create clarity, transparency, and trust in digital public services

Our goal is simple: public systems that uplift, not extract.

If you’re exploring how governance and responsibility show up in public-sector technology, our post on Designing policy that doesn’t break delivery offers another angle on aligning innovation with public value.

Further reading

If you’d like to go deeper into the historical patterns behind this topic, I shared a longer reflection on LinkedIn that expands on these ideas: “From curiosity to stewardship in AI.”